
This Week in AI: Updates from OpenAI, Anthropic, JetBrains, Cursor, and Windsurf

The past week has been packed with AI news from major players. Software developers, DevOps engineers, and ML practitioners have a lot to digest – from cutting-edge model releases to new AI-powered coding tools. Below we break down the key developments from OpenAI, Anthropic, JetBrains, Cursor, and Windsurf, with a focus on practical impacts for developers.

OpenAI – New Models and Programs for Developers

OpenAI pushed several significant updates that developers will find interesting:

-

GPT-4.1 API Launch: OpenAI introduced GPT-4.1 in their API, along with smaller mini and nano variants. These models bring major improvements in coding assistance, instruction following, and long-context handling. GPT-4.1 supports context windows up to 1 million tokens, enabling it to ingest and work with extremely large codebases or documents. Notably, GPT-4.1 scored 54.6% on SWE-bench Verified, a software engineering benchmark, which is 21.4 percentage points higher than the previous GPT-4o model – great news for developers looking for more capable code generation and analysis from the API. Compared with GPT-4o, the mini model offers nearly half the latency and 83% lower cost while still outperforming older models, and even the nano model (the fastest and cheapest of the three) can handle the full 1M-token context. This provides flexible options for integrating AI into applications depending on latency and cost needs.

-

OpenAI o3 and o4-mini in ChatGPT: In the ChatGPT lineup, OpenAI released o3 (a new high-performance model) and o4-mini (an optimized smaller model) as part of their “o-series.” These models are trained to “think for longer” before responding, improving reasoning quality. The o4-mini model is particularly noteworthy: it’s a cost-efficient model that still achieves strong performance in math, coding, and even some vision tasks. In fact, o4-mini outperforms larger predecessors on benchmarks like the AIME math competition, and its efficiency allows significantly higher usage limits for high-throughput applications. Both o3 and o4-mini are integrated with ChatGPT’s tool system and function calling, meaning they can intelligently decide when to use tools or APIs to solve problems – a capability that developers can leverage via the ChatGPT interface or API for more complex, multi-step tasks.

-

OpenAI Pioneers Program: OpenAI announced a new Pioneers Program aimed at startups and builders working on high-impact AI applications. The program will partner selected companies with OpenAI’s research team to create domain-specific evaluation benchmarks and fine-tuned models tailored to real-world use cases. This strategic initiative is meant to “advance the deployment of AI to real world use cases” by defining what good looks like in each industry and optimizing model performance for those domains. For developer teams in areas like healthcare, finance, or other verticals, this could provide early access to new model improvements and direct support in building AI solutions that meet specific needs.
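To make the 1M-token context window from the GPT-4.1 item above more concrete, here is a minimal sketch that estimates whether a source tree would fit in a single request. The 4-characters-per-token ratio is a rough rule of thumb, not the model's real tokenizer, and `estimate_tokens`, `fits_in_context`, and the 32,000-token output reserve are illustrative names and values rather than anything defined by OpenAI's API.

```python
import os

CONTEXT_LIMIT_TOKENS = 1_000_000  # GPT-4.1 context window cited above
CHARS_PER_TOKEN = 4               # assumption: rough heuristic, not a real tokenizer


def estimate_tokens(root, extensions=(".py", ".md", ".txt")):
    """Roughly estimate the token count of all matching files under root."""
    total_chars = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            if name.endswith(extensions):
                path = os.path.join(dirpath, name)
                with open(path, encoding="utf-8", errors="ignore") as handle:
                    total_chars += len(handle.read())
    # Ceiling division so a partial token still counts as one.
    return -(-total_chars // CHARS_PER_TOKEN)


def fits_in_context(root, reserved_for_output=32_000):
    """Return (fits, estimated_tokens), leaving headroom for the reply."""
    tokens = estimate_tokens(root)
    return tokens + reserved_for_output <= CONTEXT_LIMIT_TOKENS, tokens
```

For a real decision you would swap the heuristic for an actual tokenizer, but even this rough count is usually enough to tell whether a project is nowhere near the limit or well past it.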

Anthropic – Claude Evolves with Research Mode and Expanded Access

Anthropic’s Claude AI assistant gained new capabilities and plans that can boost developer productivity:

-

Claude “Research” Mode & Web Browsing: Anthropic introduced a powerful new Research feature for Claude, turning it into an agentic researcher. In this mode, Claude can conduct iterative web searches and internal document searches on your behalf, autonomously exploring a question step-by-step. It chains multiple searches, examines different angles, and provides thorough answers with inline citations for verification. For developers and engineers, this means Claude can now help investigate technical problems or gather documentation snippets from the web, saving you time on searching manuals or Stack Overflow. Anthropic highlights that this “delivers high-quality, comprehensive answers in minutes” with sources attached, which can be a huge time-saver when researching APIs, libraries, or debugging issues.

-

Google Workspace Integration: Alongside web research, Claude now integrates with Google Workspace apps like Gmail, Calendar, and Google Docs. With permission, Claude can pull in context from your emails, events, and documents to answer questions or generate output that’s aware of your work context. For example, a developer could ask Claude to summarize the latest error reports from a shared Google Doc or derive action items from a series of bug-triage emails. This integration means less manual copy-pasting of content into Claude – it can securely fetch relevant project data from your Google apps, then provide answers with citations pointing to the source email or doc. This is potentially very useful for DevOps and project managers who juggle information across repos, wikis, and emails.

-

“Max” Subscription Plan for Claude: Anthropic launched a new Claude Max Plan targeting power users who work extensively with Claude. This plan offers up to 20× higher usage limits than the standard Pro plan, with tiers providing 5× or 20× more usage for $100 or $200 per month respectively. Essentially, it’s designed for devs or teams who rely on Claude throughout the day and were hitting the regular limits. Max users also get priority access to new features and models – useful if you want to be first in line for the latest Claude improvements. Anthropic describes the Max plan as ideal for extended coding sessions, large documents and data analysis, or any scenario where you need Claude available at every step without waiting. For developers using Claude to assist in programming or writing, this could unlock longer interactive debugging sessions or processing of very large code files. (Note: Claude’s context window is already large; current Claude models accept up to 200K tokens.)

-

Upcoming Developer Conference: (Heads-up for developers) Anthropic is also holding its first developer conference, “Code with Claude,” announced on April 3, 2025 and scheduled for May 22 in San Francisco. While the announcement fell just outside the past week, it underscores Anthropic’s outreach to the dev community. Expect discussions on using Claude for coding, their safety policies, and perhaps new tooling or API updates geared towards developers.
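The two Max tiers mentioned above can be compared with a little arithmetic. This sketch assumes usage scales linearly with the advertised multiplier and uses one "Pro-equivalent" of usage as the unit. Anthropic does not publish limits in those terms, and the tier labels are just illustrative, so treat it as a way to reason about relative value only.

```python
# Max plan tiers from the item above: (usage multiplier vs. Pro, USD per month).
# The labels "Max 5x" and "Max 20x" are illustrative names for the two tiers.
MAX_TIERS = {"Max 5x": (5, 100), "Max 20x": (20, 200)}


def cost_per_pro_equivalent(multiplier, monthly_price):
    """USD paid per 1x of Pro-level usage, assuming linear scaling."""
    return monthly_price / multiplier


for name, (multiplier, price) in MAX_TIERS.items():
    print(f"{name}: ${cost_per_pro_equivalent(multiplier, price):.2f} per Pro-equivalent")
# Max 5x: $20.00 per Pro-equivalent
# Max 20x: $10.00 per Pro-equivalent
```

Under that assumption the higher tier costs half as much per unit of usage, which only pays off if you actually consume it.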

JetBrains – Smarter IDEs with AI Assistant and Junie Agent

JetBrains, known for IDEs like IntelliJ IDEA and PyCharm, made a big AI move this week by rolling out a unified AI experience in its tools:

-

All-in-One AI Assistant (Free Tier): JetBrains announced that all its various AI-driven features are now available under a single AI subscription, and importantly there’s a free tier. This means whether you use IntelliJ, WebStorm, PyCharm, or any JetBrains IDE, you can access the AI features consistently. The move is aimed at boosting developer productivity by integrating AI into development workflows seamlessly. Even developers who don’t pay can try out core AI assistance features for free, lowering the barrier to entry. JetBrains emphasized their mission to make devs more productive (something they’ve focused on for 25+ years) and see AI as a natural extension of that goal.

-

Introducing Junie – a Claude-Powered Coding Agent: A highlight of JetBrains’ update is Junie, a new AI coding agent built into their IDEs. Junie is described as a “coding agent” that can perform more autonomous tasks than a basic code completion tool. Under the hood, Junie is powered by Anthropic’s Claude model, giving it state-of-the-art coding capabilities. According to JetBrains, Junie can handle complex, real-world coding tasks and is designed to work 10 steps ahead of the developer. In practical terms, you might be able to ask Junie to implement a new module or refactor code across a project, and it will leverage Claude’s AI to do so contextually. JetBrains also partnered with OpenAI early on, and OpenAI’s Head of EMEA noted that Junie will “unlock exciting new possibilities for developers globally” as part of JetBrains’ ongoing efforts to enhance developer productivity. This dual partnership (Anthropic and OpenAI) suggests JetBrains AI features might intelligently choose or blend models behind the scenes for the best results.

-

Enterprise-Grade and Infrastructure: JetBrains isn’t doing this alone – they highlighted partnerships with cloud providers as well. Google Cloud is collaborating to provide the infrastructure for JetBrains AI across three continents, ensuring low-latency and reliable performance inside the IDEs. For enterprise teams, JetBrains AI promises data privacy (code stays local unless you opt in to cloud AI features) and integration into existing workflows. All these AI features are built into the familiar JetBrains IDE interface, so developers don’t have to switch tools. This is a clear signal that mainstream development environments are embracing AI co-pilots deeply, and JetBrains is ensuring their implementation is robust enough for 11+ million developers who use their tools.

Cursor – AI Code Editor Adds GPT-4.1 and More Models

Cursor, the AI-driven code editor (from the startup Anysphere), rolled out updates bringing the latest AI models to its users:

-

Integration of GPT-4.1 (Free Preview): As soon as OpenAI released GPT-4.1, Cursor made it available in their editor. As of this week, users can enable GPT-4.1 in Cursor’s settings and try it out immediately. The Cursor team even made GPT-4.1 free to use (temporarily) so that developers can get a feel for its capabilities. This rapid integration is great for devs who want to experiment with GPT-4.1’s new 1M-token context and improved coding skills directly in their coding environment. It shows how competitive the AI coding assistant space is – Cursor is moving fast to support the latest and greatest models. They did note they’re monitoring the new model’s tool-use abilities and will share feedback with OpenAI, which implies Cursor might allow GPT-4.1 to use some “agent” features (like running code or searches within the editor).

-

New Model Options: xAI’s Grok 3: In addition to OpenAI models, Cursor added support for Grok 3 and Grok 3 Mini, which are large language models from xAI (the AI venture led by Elon Musk). Both Grok models can be used in Cursor’s “Agent mode,” meaning they can not only assist with code but also perform multi-step autonomous coding tasks (similar to GitHub Copilot Labs or ChatGPT plugins). Grok 3 is a premium model, while Grok 3 Mini is currently free for all users – making it the first free AI agent in Cursor. Developers can enable these in settings and even bring their own API key if they have one. This gives developers more flexibility; for example, you might use GPT-4.1 for its accuracy in complex tasks, but switch to the free Grok 3 Mini for quick iterative work or to avoid using up paid quotas. It’s also a notable collaboration, indicating Cursor’s platform is model-agnostic and keen to integrate new AI systems as they emerge.

-

Agent Mode and “Shadow Workspaces”: Cursor has been pioneering an Agent mode where the AI can actually execute code or make changes in a safe sandbox, helping with tasks like debugging or running tests. This week’s model additions (GPT-4.1 and Grok) both support Agent mode, meaning they can take actions like running the code you’re writing to verify outputs. Cursor’s blog discusses “shadow workspaces” – an approach where the AI works in a hidden copy of your project so it doesn’t mess up your files while iterating on a solution. For example, if you ask the AI to implement a new function, it could try writing and running it in the shadow workspace first. This is meant to let the AI safely attempt fixes or additions, and only apply changes when they’re verified (preventing chaotic edits). It’s an exciting development for those of us who want an AI pair programmer that can not only suggest code but also execute and test it in our editor. While still experimental, it hints at where dev tools are going: AI that can autonomously troubleshoot code, not just write it.
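The shadow-workspace idea described above can be sketched in a few lines: copy the project, let the change happen in the copy, run a check there, and sync back only on success. This is a simplified illustration of the concept, not Cursor's implementation. The function name `try_in_shadow` and its parameters are hypothetical, and the sync step only adds or overwrites files, so deletions made in the copy are not propagated.

```python
import shutil
import subprocess
import tempfile
from pathlib import Path


def try_in_shadow(project_dir, apply_change, check_cmd):
    """Apply a change in a throwaway copy; sync back only if the check passes.

    apply_change: callable that receives the shadow directory (a Path)
                  and edits files inside it.
    check_cmd:    command list run inside the shadow copy, e.g. a test runner.
    Returns True if the change was verified and copied into project_dir.
    """
    project_dir = Path(project_dir)
    with tempfile.TemporaryDirectory() as tmp:
        shadow = Path(tmp) / "shadow"
        shutil.copytree(project_dir, shadow)  # hidden copy of the project
        apply_change(shadow)                  # the proposed edit touches the copy only
        result = subprocess.run(check_cmd, cwd=shadow, capture_output=True)
        if result.returncode != 0:
            return False                      # real files are left untouched
        # Verified: copy new and changed files over the real project.
        shutil.copytree(shadow, project_dir, dirs_exist_ok=True)
        return True
```

A caller would pass a function that writes the proposed edit and a command such as a test runner invocation. If the command exits non-zero, the original project is never modified.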

Windsurf (formerly Codeium) – New AI IDE and “Cascade” Agent

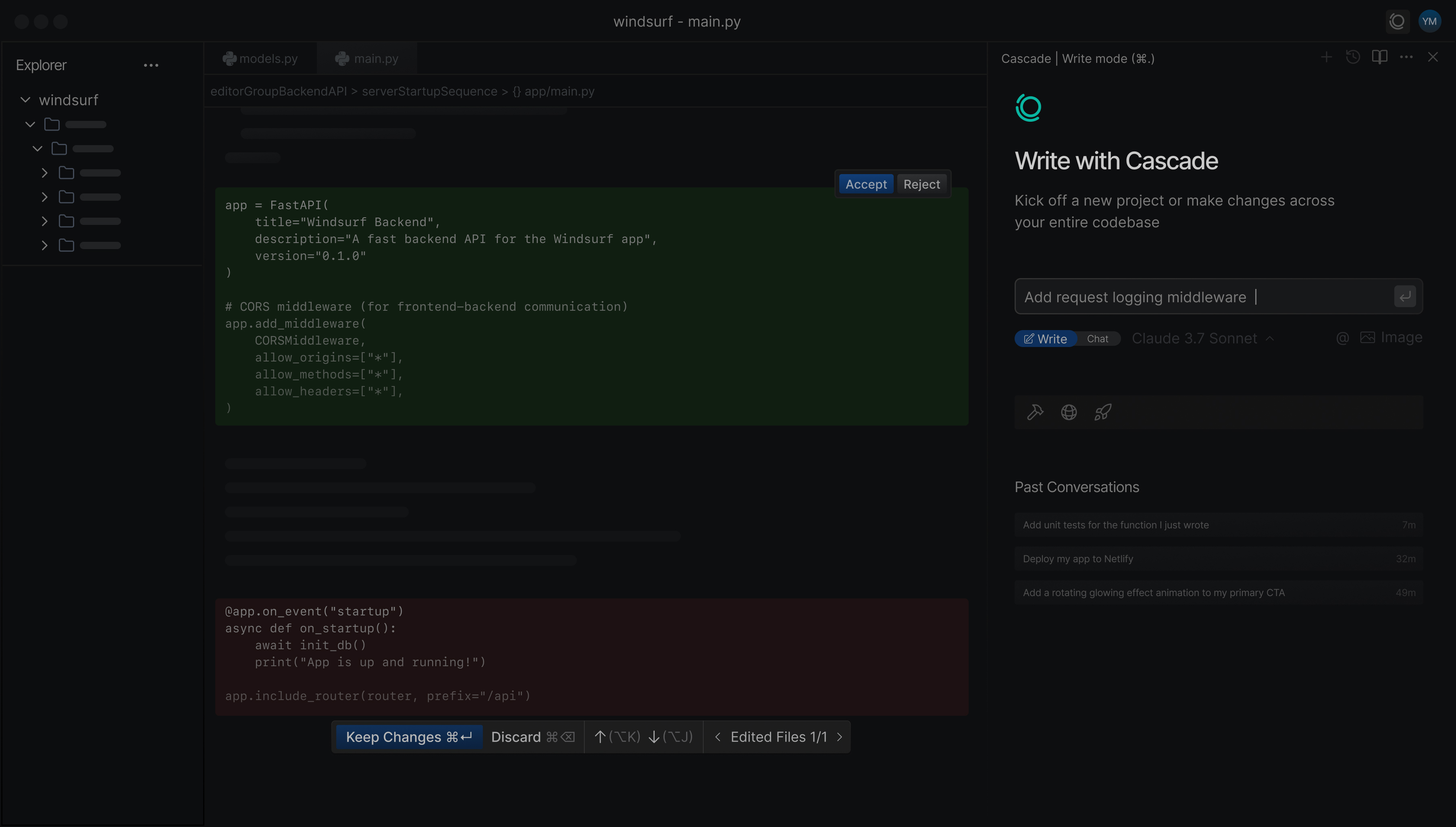

Windsurf, an AI-powered IDE (formerly Codeium). The right panel shows Cascade, the built-in AI coding agent, which can take requests (here, adding a logging middleware) and keep track of past conversations. The IDE is designed to anticipate developer needs by applying changes and fixes proactively.

-

Codeium Rebrands to Windsurf: The popular AI coding assistant Codeium has rebranded itself as Windsurf, taking the name of the Windsurf Editor, its standalone, purpose-built IDE aimed at maximizing “flow state” for developers. The editor itself first shipped in November 2024; the company-wide rename completes the shift from Codeium being just a plugin to a full AI-native code editor (similar to what Cursor is doing). The rebrand was announced in early April, and over the past week Windsurf has been rolling out updates (dubbed “Waves”) to improve the editor.

-

Launch of Windsurf Editor with Cascade Agent: The Windsurf Editor is an AI-first development environment that comes with an AI agent called Cascade baked in. Cascade is described as “an agent that codes, fixes and thinks 10 steps ahead”. In practice, Cascade watches what you’re doing and can execute tasks proactively. For example, Windsurf touts that the editor will start fixing test failures before you even write the test, and resolving issues before they surface. It keeps track of your command history, file changes, and even clipboard to give contextual suggestions (via a feature called Windsurf Tab). For developers, this means the IDE might auto-suggest creating a function based on a failing test, or automatically apply a common fix when it detects an error pattern – almost like having a junior developer or pair programmer constantly looking over your shoulder in a helpful way.

-

Key Features and Integrations: Windsurf’s Cascade agent is designed with memory and tool integrations. It has “Memories” to remember important details about your codebase (e.g. conventions, past decisions) and MCP (Model Context Protocol) support to hook into external tools. From the editor, you can connect Cascade to services like Figma, Slack, Stripe APIs, databases, etc., via one-click “server” plugins. This is akin to giving your AI agent access to third-party tools – for instance, it could fetch design specs from Figma or query a test database while coding. Such integrations hint at a future where your IDE’s AI can perform end-to-end tasks (like building a UI from a Figma design, or generating CRUD code after introspecting a database schema). Windsurf is also targeting enterprise readiness: they claim 40–200% increases in developer productivity at companies using it and have over 1,000 enterprise customers testing it. For individual devs, the Windsurf Editor is available to download and try, offering a new alternative to VS Code or JetBrains for those who want an AI-augmented coding experience.

-

Continuous Updates (Wave 6, 7): The team has been iterating fast – they released Wave 6 and Wave 7 updates in early April with improvements to the Windsurf Editor. The specifics are not covered here, but these likely include UI/UX tweaks and enhanced AI capabilities based on user feedback from the beta. The fact that they are naming updates in “waves” suggests a frequent release cycle. Developers can expect a stream of new features or model upgrades in Windsurf on almost a weekly basis. With Codeium’s proven track record (as a popular free Copilot alternative) and the new direction as Windsurf, this is a space to watch. It underscores a trend: AI isn’t just an add-on to existing IDEs – we’re seeing full-fledged IDEs built around AI.
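The tool integrations described for Cascade above boil down to an agent picking a named tool and passing it structured arguments. The sketch below illustrates that general pattern with an in-process registry. It is not the Model Context Protocol wire format or Windsurf's implementation, and the tool names (`query_test_db`, `fetch_design_spec`) and their canned results are hypothetical stand-ins for real services.

```python
import json

TOOLS = {}


def tool(name, description):
    """Register a function so an agent can discover and call it by name."""
    def decorator(func):
        TOOLS[name] = {"description": description, "call": func}
        return func
    return decorator


@tool("query_test_db", "Return column names for a table (stubbed data).")
def query_test_db(table):
    schemas = {"users": ["id", "email", "created_at"]}  # stand-in for a real database
    return schemas.get(table, [])


@tool("fetch_design_spec", "Return a design spec for a component (stubbed data).")
def fetch_design_spec(component):
    return {"component": component, "padding_px": 16}   # stand-in for a design tool


def dispatch(request_json):
    """Run one tool request of the form {"tool": name, "arguments": {...}}."""
    request = json.loads(request_json)
    entry = TOOLS.get(request["tool"])
    if entry is None:
        return {"error": f"unknown tool: {request['tool']}"}
    return {"result": entry["call"](**request["arguments"])}
```

In a real integration the registry would live in a separate server process and the model would produce the request. The division of labor is the same, though: the model chooses, and ordinary code executes.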

Key Takeaways for Developers

-

The AI coding assistant landscape is evolving rapidly. Both established firms (JetBrains, OpenAI, Anthropic) and startups (Cursor, Windsurf) are delivering new tools and models at a blistering pace. For developers, this means more choice and rapidly improving capabilities for AI-assisted development.

-

New models (OpenAI GPT-4.1, Anthropic Claude 3.7 Sonnet, etc.) are bringing better code understanding, larger context windows, and more reliable tool use. These improvements directly benefit coding tasks – e.g., handling bigger projects in one go, following complex instructions, or automating multi-step workflows. If you’re using AI for code, keep an eye on model updates and experiment with the options as they become available (as Cursor did by enabling GPT-4.1 on release).

-

IDE Integration is deepening. JetBrains integrating AI across all products and Windsurf launching an AI-centric editor show that your coding environment itself is becoming AI-enhanced. Unlike earlier “AI pair programmers” that just completed lines of code, the new wave (Junie, Cascade, Cursor’s agent mode) can understand broader context, run tests, search documentation, and make larger structural changes. It’s worth trying these tools on a small project to see how they can fit your workflow – you may find that routine tasks like writing boilerplate, commenting code, or updating dependencies can be offloaded to the AI, letting you focus on design and logic.

-

Privacy and control remain considerations. Tools like Cursor and Windsurf are offering features like Privacy Mode or on-prem installs (for enterprise) to ensure code stays private. As a developer or team adopting these, you should review how your code data is used. Many solutions let you opt out of sharing data or run locally-hosted versions of models if needed. Given the productivity boost, finding a comfortable setup (perhaps using local models for proprietary code and cloud models for open-source or non-sensitive tasks) could be a good compromise.

-

Learning curve and productivity: Embracing these AI features might require some learning and adjustment in how you work. For example, writing good prompts becomes a valuable skill when asking Claude’s Research mode to investigate something, or when instructing Junie to generate a module. Investing a bit of time to read the docs or watch demos for these tools can pay off. For instance, knowing that Claude’s Google Workspace integration can pull from your documents and calendar means you can ask more context-rich questions like “Draft release notes for all features completed this sprint” and get a decent first draft. The tools are there to reduce toil – but you get the most out of them when you understand their capabilities and limits.
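The compromise suggested in the privacy takeaway above (local models for proprietary code, cloud models for everything else) can be expressed as a small routing rule. This is a minimal sketch under assumed conventions: the glob patterns, the `choose_backend` name, and the `"local"`/`"cloud"` labels are hypothetical and not a feature of any tool mentioned here.

```python
from fnmatch import fnmatch

# Hypothetical policy: paths matching these patterns never leave the machine.
SENSITIVE_PATTERNS = ["internal/*", "*.env", "secrets/*"]


def choose_backend(path, sensitive_patterns=SENSITIVE_PATTERNS):
    """Return "local" for sensitive files and "cloud" for everything else."""
    normalized = path.replace("\\", "/")  # treat Windows separators the same way
    if any(fnmatch(normalized, pattern) for pattern in sensitive_patterns):
        return "local"
    return "cloud"
```

With these patterns, `choose_backend("internal/billing/core.py")` returns `"local"` and `choose_backend("src/app.py")` returns `"cloud"`. Keeping the policy in one reviewable list makes it easier to audit what may be sent to a hosted model.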

This week’s developments make one thing clear: AI is becoming an indispensable part of software development. Whether it’s through smarter IDEs, more powerful API models, or agent-driven coding assistants, developers who leverage these updates can gain a significant edge in productivity. It’s an exciting (and rapidly changing) time to be coding. Stay curious and keep experimenting with these new tools – and we’ll keep you updated as the AI frontier advances!
